by Ed Nuhfer, California State Universities (retired)

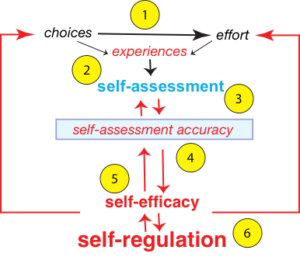

Self-assessment is a metacognitive skill that employs both cognitive competence and affective feelings. After more than two decades in which scholars misunderstood, misrepresented, and deprecated its value, self-assessment is finally being recognized as valid, measurable, valuable, and connected to a variety of other beneficial behavioral and educational properties. Now is the opportune time to educate in ways that strengthen that ability. We synthesize this series into four concepts to address when teaching self-assessment.

Teach the nature of self-assessment

Until recently, decades of peer-reviewed research popularized a misunderstanding of self-assessment as described by the Dunning-Kruger effect. The effect portrayed the natural human condition as one in which most people overestimate their abilities and cannot recognize that they do so, the most incompetent are the most egregious offenders, and only the most competent can self-assess accurately.

From its founding to the present, promotion of “the effect” relied on mathematics that statisticians and mathematicians now recognize as specious. Behavioral scientists can no longer argue for “the effect” by invoking the unorthodox quantitative reasoning used to propose it. Any salvaging of “the effect” requires different mathematical arguments to support it.

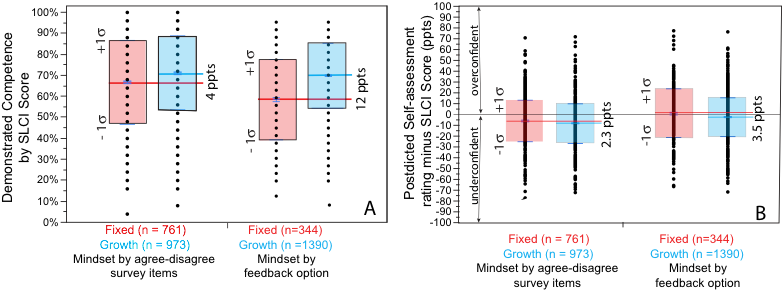

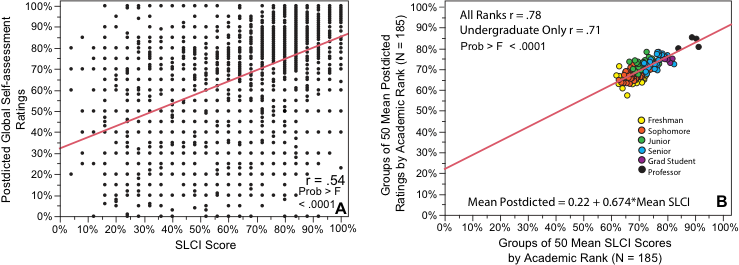

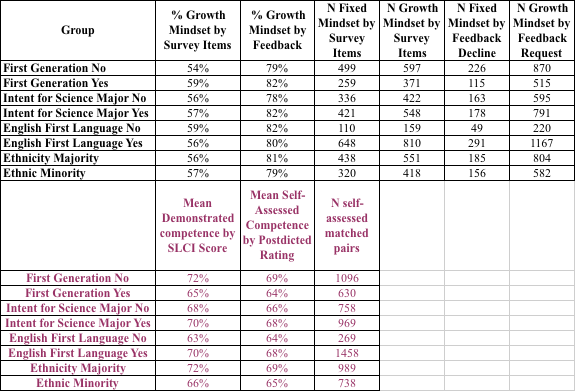

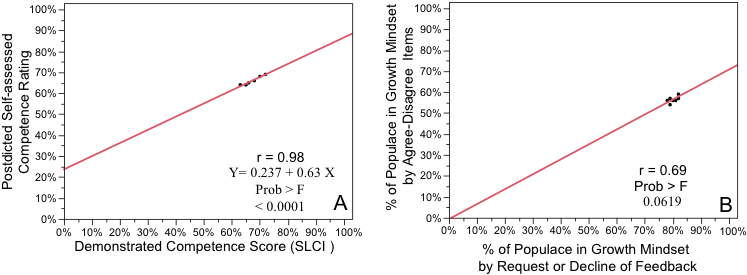

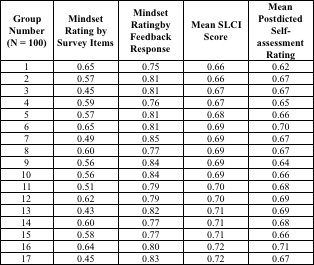

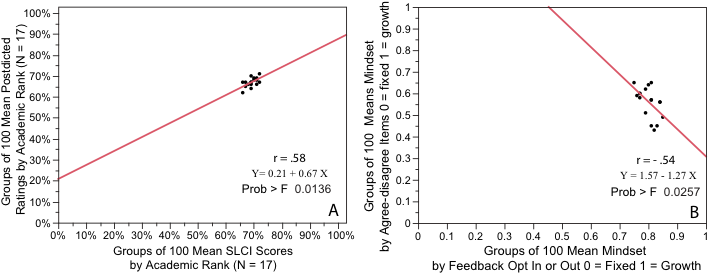

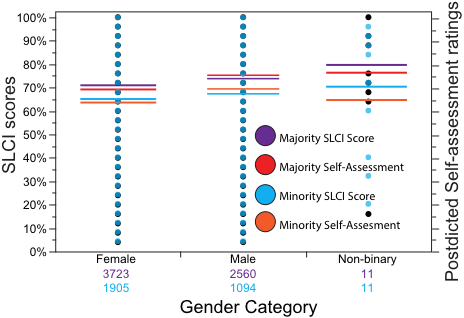

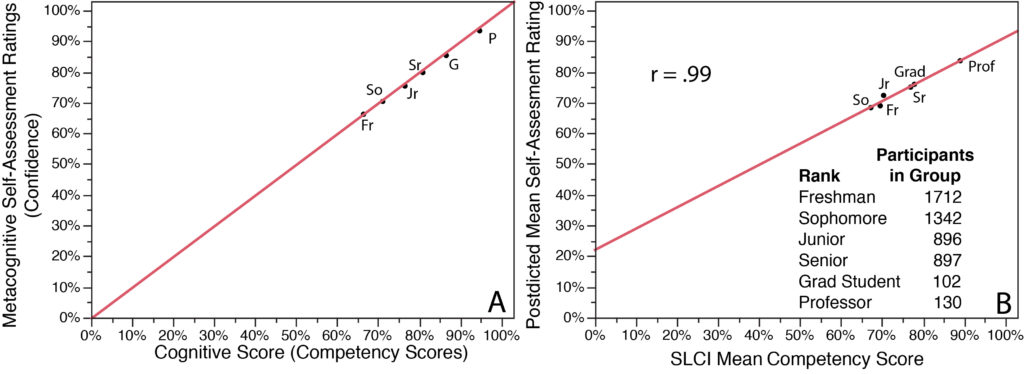

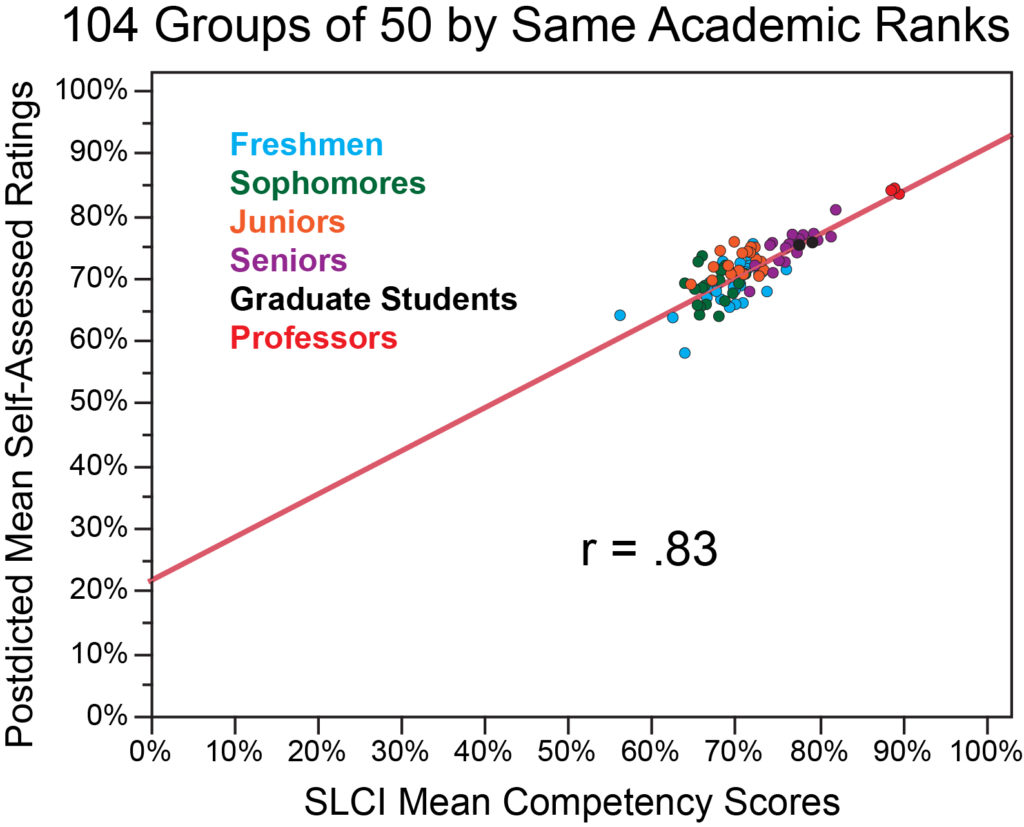

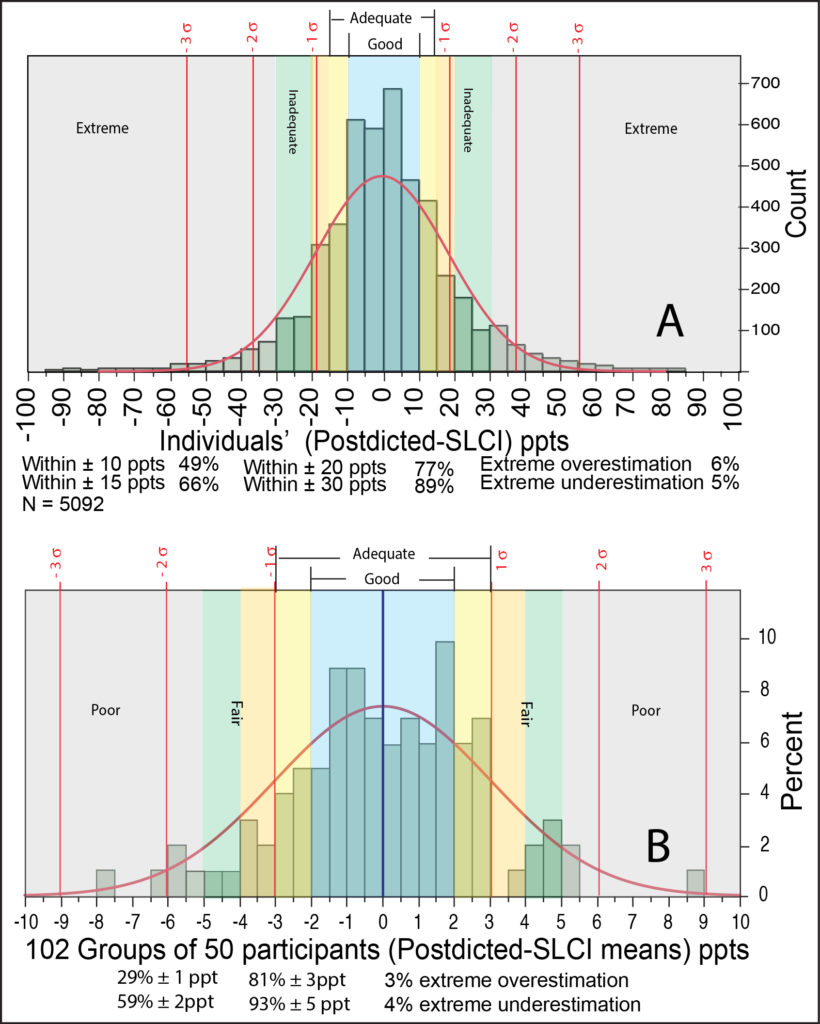

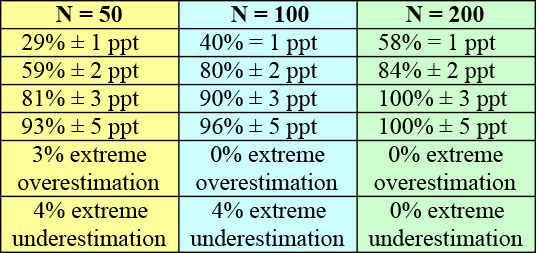

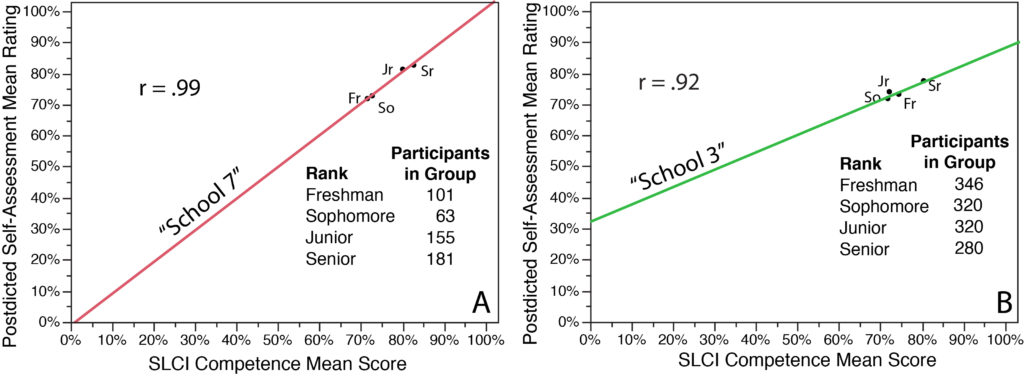

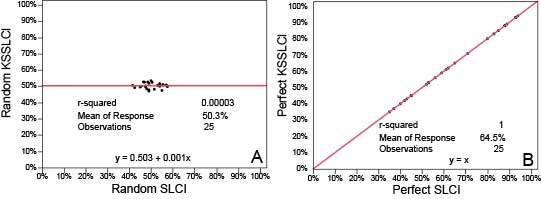

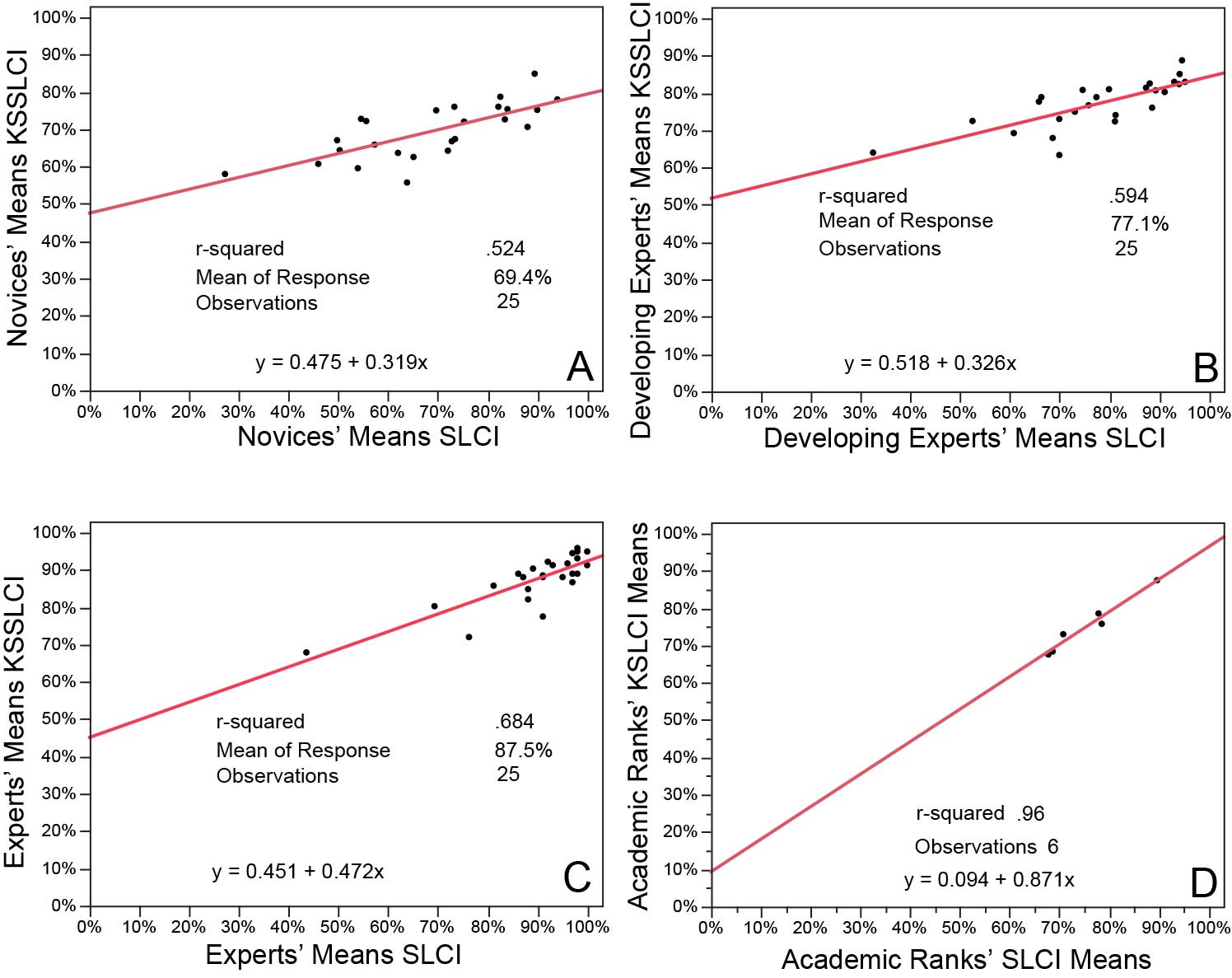

Quantitative approaches confirm that a few percent of the populace are “unskilled and unaware of it,” as described by “the effect.” However, these same approaches affirm that most adults, even when untrained for self-assessment accuracy, are generally capable of recognizing their competence or lack thereof. Further, they overestimate and underestimate with about the same frequency.

Like the development of higher-order or “critical” thinking, the capacity for self-assessment accuracy develops slowly with practice, more slowly than required to learn specific content, and through more practice than a single course can provide. Proficiency in higher-order thinking and self-assessment accuracy seem best achieved through prolonged experiences in several courses.

During pre-college years, a deficit of relevant experiences produced by conditions of lesser privilege disadvantages many new college entrants relative to those raised in privilege. However, both the Dunning-Kruger studies and our own (Chapter 6, https://books.aosis.co.za/index.php/ob/catalog/book/279) confirm that self-assessment accuracy is indeed learnable. Those undeveloped in self-assessment accuracy can become much more proficient through mentoring and practice.

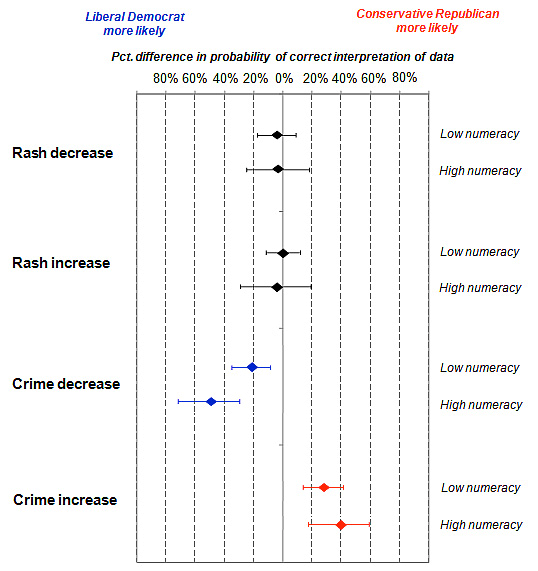

Teach the importance of self-assessment

As a nation that must act to address severe threats to well-being, such as healthcare, homelessness, and climate change, we have rarely been so incapacitated by polarization and bias. Two early entries on bias in this guest-edited series explained bias as a ubiquitous survival mechanism in which individuals relinquish self-assessment to engage in modern forms of tribalism that marginalize others in our workplaces, institutions, and societal cultures. Marginalizing others prevents holding the needed consensus-building conversations between diverse groups that bring creative solutions and needed action.

Relinquishing metacognitive self-assessment to engage in bias obscures one’s perception of the impacts and consequences of what one does. Developing the skill to exercise self-assessment and use evidence, even under peer pressure not to do so, seems a way to retain one’s perception and ability to act wisely.

Teach the consequences of devaluing self-assessment

The credibility “the effect” garnered as “peer-reviewed fact” helped rationalize the public’s tolerance of bias and support for hierarchies of privilege. A quick Google® search of the “Dunning-Kruger effect” reveals widespread misuse to devalue and taunt diverse groups of people as ignorant, unskilled, and inept at recognizing their deficiency.

Underestimating and disrespecting other people’s abilities is not simply innumerate and dismal; it cripples learning. Subscribing to the misconception disposes the general populace to avoid trusting in themselves and in others who merit trust, and to dismiss implementing or even respecting effective practices developed by others presumed to be inferiors. It discourages reasoning from evidence and promotes unfounded deference to “authority.” Devaluing self-assessment encourages individuals to relinquish their autonomy to self-assess, which weakens their ability to resist demagogues who would polarize them into embracing bias.

Teach self-assessment accuracy

As faculty, we have frequently heard the proclamation “Students can’t self-assess.” Sadly, we have yet to hear that statement confronted by, “So, what are we going to do about it?”

Opportunities exist to design learning experiences that develop self-assessment accuracy in every course and subject area. Knowledge surveys, assignments with required self-assessments, and post-evaluation tools like exam wrappers offer straightforward ways to design instruction to develop this accuracy.

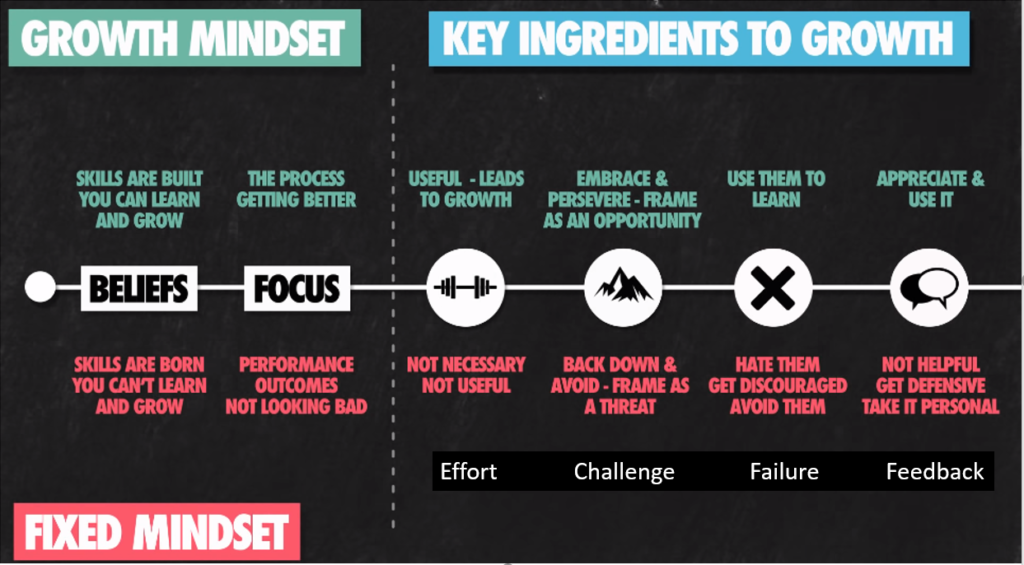

Given the current emphasis on the active learning structures of groups and teams, teachers can easily mistake these for the sole domains of active learning and deprecate solitary study. Interactive engagements are generally superior to the conventional structure of lecture-based classes for cognitive mastery of content and skills. However, these structures seldom empower learners to develop affect or recognize the personal feelings of knowing that come with genuine understanding. Those feelings differ from the feelings that rest on shallow knowledge, which often launch the survival mechanism of bias at critically inopportune times.

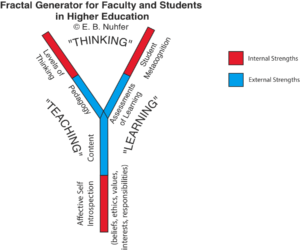

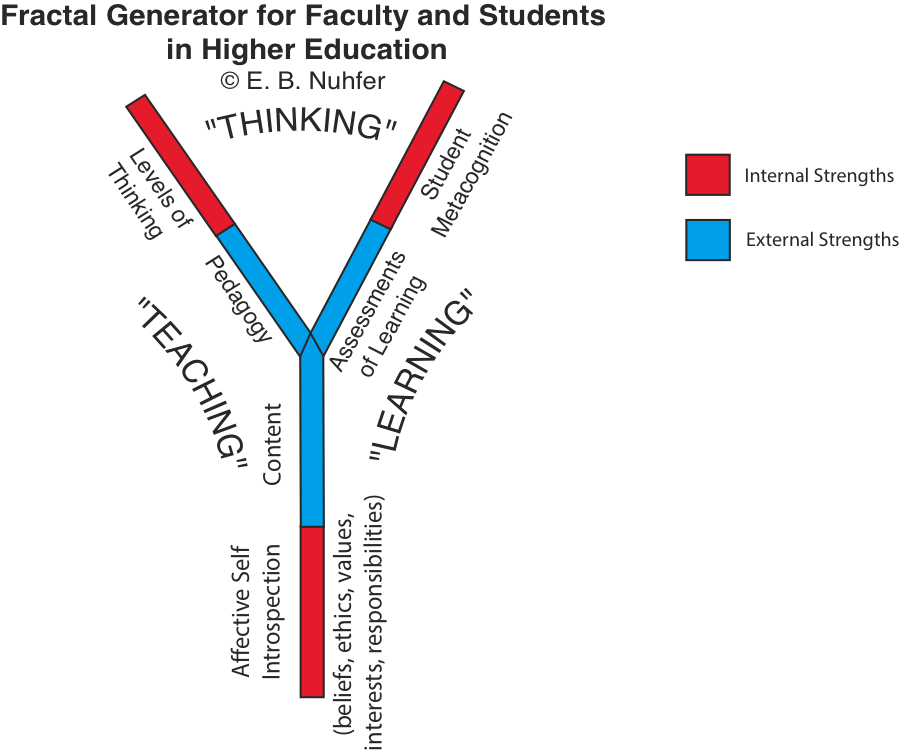

Interactive engagement for developing cognitive expertise differs from the active engagement in self-assessment needed to empower individuals to direct their lifelong learning. When students use quiet reflection time alone to practice self-assessment, drawing on their understanding of content to engage in knowing self, this too is active learning. The ability to distinguish the feeling of deep understanding requires repeated practice in such reflection. We contend that active learning design that attends to both cognition and affect is superior to design that attends to only one of these.

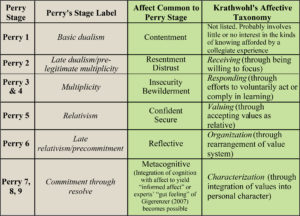

To us, John Draeger was particularly spot-on in his IwM entry, recognizing that instilling cognitive knowledge alone is insufficient as an approach for educating students or stakeholders within higher education institutions. Achievement of successful outcomes depends on educating for proficiency in both cognitive expertise and metacognition. In becoming proficient in controlling bias, “thinking about thinking” must include attention to affect to recognize the reactive feelings of dislike that often arise when confronting the unfamiliar. These reactive feelings are probably unhelpful to the further engagement required to achieve understanding.

The ideal educational environment seems to be one in which stakeholders experience the happiness that comes from valuing one another during their journey to increase content expertise while extending the knowing of self.