by Dr. Scott Santos, faculty member who became Chair of the Department of Biological Sciences in 2018; thus, he was thrust into leadership of the curricular redesign project.

In the final post of the “The Evolution of Metacognition in Biological Sciences” guest series, Dr. Scott Santos shares his experience of moving from faculty member, when the department’s effort to improve metacognition began, to chair, suddenly in a position to lead that effort. He also shares key lessons we learned over the course of the project and looks ahead to what’s next for the Biology department.

Learning about Metacognition

“Metacognition…Huh? What’s that?!” is what popped to mind the first time I came across that word in an email announcing that our department would be investigating ways to integrate it into courses at our 2017 Auburn University (AU) Department of Biological Sciences (DBS) Annual Faculty Retreat. Testimony to my naïveté on metacognition at the time comes from the fact that the particular email announcing the above is the first containing that specific word among 100,000+ correspondences dating back to 2004 when I started as a faculty member.

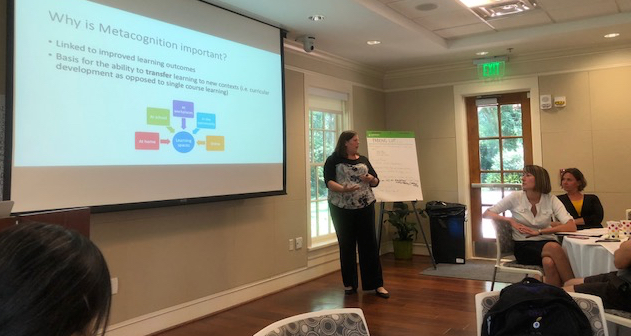

The email mentioning metacognition prompted me to spend a few minutes researching the word and, not surprisingly, discovering a wealth of internet resources. One of the most useful I found among these came from the Center for Teaching at fellow Southeastern Conference school, Vanderbilt University, where it was defined as “… simply, thinking about one’s thinking” (Chick, 2015). I found this interesting since it reminded me of a recurring comment I have heard over the years amongst individuals who have successfully defended their Ph.D. dissertations, namely that the defense makes you realize how much you know about one particular area of knowledge and how little you know about everything else.

The point that jumped out at me concerning this potential analogy was that, if it represented a genuine example of metacognition, it evolves in an individual over multiple years as they experience the trials and tribulations (as well as rewards and eventual success) associated with obtaining a terminal degree. Ambitiously, we were taking on the challenge of attempting to instill in early-career students an awareness and recognition of their strengths and weaknesses across the spectrum of learning, writing, reading, etc. As you can imagine, this was my first indicator that we had some work to do.

Educating Our Department

So how did the DBS faculty at AU approach this seemingly daunting task of bringing metacognition “to the masses”?

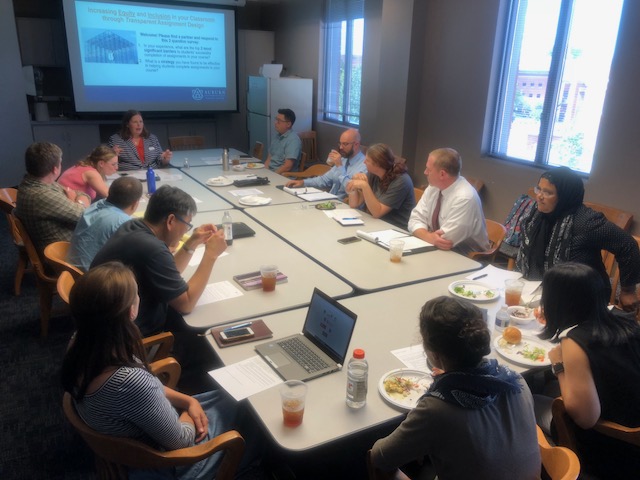

Firstly, our previous departmental leadership had the foresight to start the process by having the retreat facilitated by highly qualified individuals. Specifically, Dr. Ellen Goldey (currently Dean, Wilkes Honors College, Florida Atlantic University; formerly Department Chair, Wofford College, SC) and Dr. April Hill (Chair, Department of Biology, University of Richmond), two nationally recognized leaders involved in the National Science Foundation (NSF)-funded Vision and Change (V&C) Report (Brewer & Smith, 2011), were recruited to conduct a workshop that included integration of metacognition into our curricula. This proved highly useful in helping our faculty begin to wrap our collective minds around what metacognition was (and could be), along with how we might begin approaching its integration into our existing and future courses. The workshop has been followed by general faculty meetings and subcommittee meetings, such as those of the DBS Curriculum Committee, held at regular intervals to continue this process.

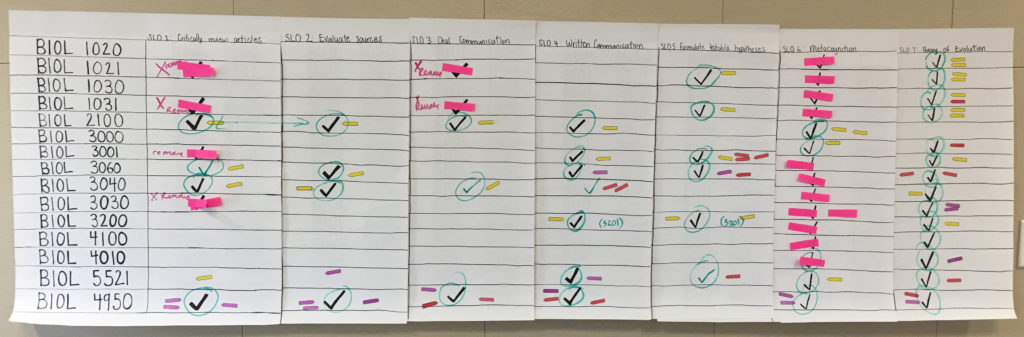

Overall, I am happy to report that we have made some significant progress in this area. We have held specialized workshops on the topic and discussed approaches to incorporating metacognitive prompts into midterms, finals, and surveys of undergraduate student research experiences (which collect responses for future qualitative analyses), to integrating metacognition development and assessment into our budding ePortfolio initiative, and to other activities (like this blog series). However, these modest successes have not come without challenges: as our “metacognition massacre” experience taught us, it takes significant time and energy both for such efforts to come to fruition and to seed and foster support for them among the faculty charged with bringing metacognition into the classroom.

Key Insights

It has now been several years since AU DBS started our initiatives with metacognition, and during this time I have transitioned from an individual faculty member “in the trenches” to Chair of the department and thus charged with “leading the troops.” While I would be well-off financially if I had received a nickel for every time I have been offered “congratulations and condolences” in the year and a half since becoming Chair, the role has given me a new and different perspective on our metacognitive efforts:

- First, a passionate and dedicated team is needed for initiatives like this to prosper and we, as a department, have been fortunate to have that in the form of our AU colleagues who are also contributing posts to this series. Importantly, they belong to multiple units outside DBS, thus bringing the needed expertise and perspective that we lacked or might miss, respectively. We are greatly indebted to them, and departmental chairs and heads interested in, or intending to start, similar initiatives would be wise to establish and cultivate such collaborations early in the process. It is very helpful to have expert advice when tackling issues that are unfamiliar to most of the faculty.

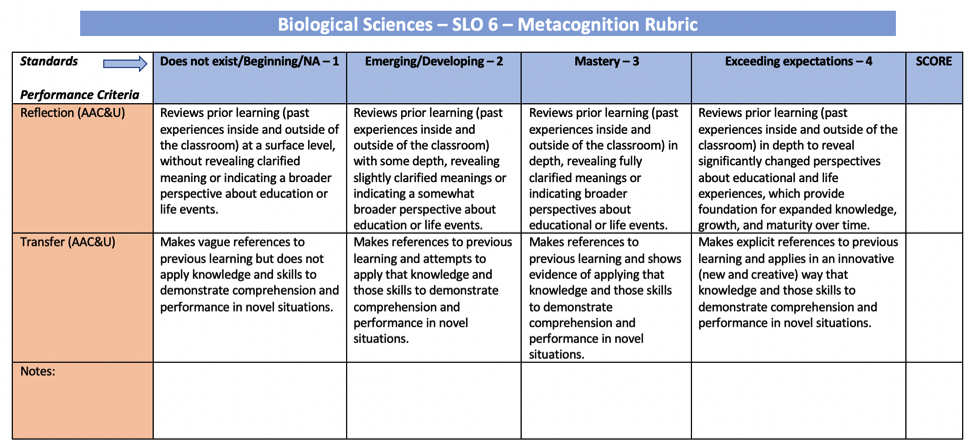

- Second, working with your faculty to understand what metacognition is, and to define expectations and assessment for initiatives around it, is paramount for your department’s immediate success in implementing activities from such efforts. In our case, the fact that many AU DBS faculty wrestled with the concept of metacognition meant that we had to invest more time in calibration before discussion could move forward.

- Third, the significance of soliciting undergraduate student participation during the development and implementation stages of the process should not be undervalued, since students are the constituents whom our efforts ultimately target and thus deserve a voice at the table. Although our posts in this series have highlighted inflection points for the faculty as we moved our curriculum toward more metacognition, it is critical to note that we involved students as partners throughout the process. Some strategies we used include organizing student focus groups led by facilitators outside the department, conducting surveys, and inviting students to some meetings and department retreats.

Importantly, this should not be considered an exhaustive list and instead should serve as a general guide of issues to consider from someone who has had an opportunity to both witness and participate in the process from the departmental faculty and leadership perspectives.

Looking Toward the Future

What does the future hold for AU DBS when it comes to metacognition? On one hand, we will continue in the short term to implement the initiatives described above while being opportunistic in improving them, a strategy we consider consistent with the current stage of our efforts to develop metacognitive abilities in students enrolled in our programs. On the other hand, the long-term forecast, at least from the departmental standpoint, is more amorphous, in part because we need to involve a large number of newly recruited faculty. We look forward to new directions and possibilities as we learn about new strategies from our colleagues, though we recognize the need to balance and maintain synergy between departmental undergraduate and graduate programs in the face of limited resources.

Finally, a key element of metacognition highlighted by Vanderbilt’s Center for Teaching is “recognizing the limit of one’s knowledge or ability and then figuring out how to expand that knowledge or extend the ability.” Given that, I would like to think that Auburn’s Department of Biological Sciences itself is attempting to be metacognitive in its approach to preparing and fostering metacognition in students, and it will be interesting to see how our current efforts evolve in the future.

References:

Chick, N. (2015). Metacognition: Thinking about one’s thinking. Vanderbilt University Center for Teaching.

Brewer, C. A., & Smith, D. (2011). Vision and change in undergraduate biology education: A call to action. American Association for the Advancement of Science, Washington, DC.